The distributed computing model emerged as a way to ingest and analyze large amounts of data once data volumes started growing exponentially.

The 'Big Data Problem' started when one machine was no longer able to process, or even store, all the data required for a specific analysis. The solution was to distribute data over clusters of machines: instead of scaling vertically (getting single machines with more power), systems would scale horizontally (adding more nodes to the cluster as the data grows).

A key concept in distributed computing is the partitioning of data: how data is split across different (physical and logical) partitions in a distributed system may have a huge impact on many aspects of a cluster, such as performance and fault tolerance.

Throughout the comparison, I'll briefly describe some key concepts regarding the architecture of each service, as they will help clarify how and why partitioning is implemented.

This comparison will hopefully clarify the similarities and differences among four AWS services: Redshift, DynamoDB, Kinesis Streams and EMR.

Redshift is Amazon’s fast and fully managed petabyte-scale data warehouse service in the cloud.

It is a columnar, relational database that mainly serves OLAP (Online Analytical Processing) and BI (Business Intelligence) purposes.

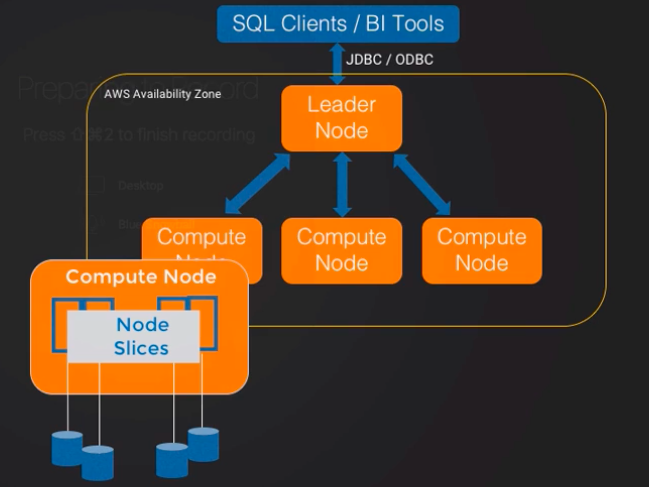

In Redshift architecture, a typical cluster consists of one “Leader Node” and many “Compute Nodes” (although a single-node cluster is also possible).

The compute nodes are the ones storing the data. Data on each node is split among 'node slices' and stored in blocks with a fixed size of 1 MB.

Below are some of the benefits of a multi-node cluster:

- MPP (Massively Parallel Processing): Multiple nodes execute queries in parallel

- Each node has dedicated CPU, memory and local storage

- Easy scaling (out/in and up/down)

- Backups are done in parallel

Since Redshift is used for OLAP/BI workloads (which involve aggregations and joins of data from different tables), correct and efficient partitioning of data across nodes and node slices is of paramount importance and has a direct impact on query performance. Ideally, each query should involve only data stored in one specific node slice in order to get maximum performance.

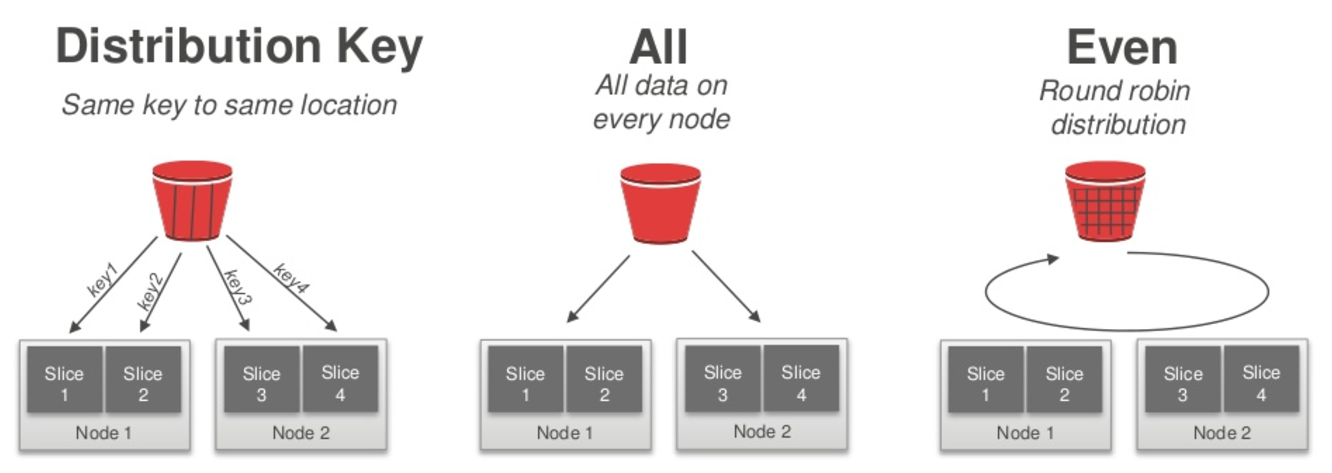

Redshift has three different options for data partitioning (called distribution styles):

- Even (Round-robin distribution)

- Key (similar to column-partitioning/sharding)

- All (similar to 'read replicas')
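The three distribution styles above can be mimicked with a toy model. This is only a sketch under stated assumptions: the `distribute` helper, the `orders` rows and the use of `zlib.crc32` as the hash are all hypothetical stand-ins, and Redshift's real hashing and placement logic is internal to the service.

```python
import zlib
from collections import defaultdict


def distribute(rows, num_targets, style, key=None):
    """Return {target_id: [rows]} for a toy EVEN / KEY / ALL distribution."""
    targets = defaultdict(list)
    for i, row in enumerate(rows):
        if style == "EVEN":
            # Round-robin: row i goes to target i mod N, regardless of content.
            targets[i % num_targets].append(row)
        elif style == "KEY":
            # Hash the distribution column; equal values land on the same target.
            # crc32 is a stand-in here, not Redshift's internal hash function.
            h = zlib.crc32(str(row[key]).encode("utf-8"))
            targets[h % num_targets].append(row)
        elif style == "ALL":
            # Every target holds a full copy of the table.
            for t in range(num_targets):
                targets[t].append(row)
        else:
            raise ValueError(f"unknown distribution style: {style}")
    return dict(targets)


# Hypothetical table: 12 orders belonging to 3 customers.
orders = [{"order_id": n, "customer_id": n % 3} for n in range(12)]

even = distribute(orders, 4, "EVEN")
by_key = distribute(orders, 4, "KEY", key="customer_id")
everywhere = distribute(orders, 4, "ALL")
```

With KEY, all orders of one customer end up together, which is what makes joins and group-bys on that column cheap; with EVEN the rows are balanced but unrelated to any column; with ALL the storage cost is multiplied by the number of copies.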

Common considerations when choosing a distribution style (rules of thumb):

KEY

– Large fact tables

– Large dimension tables

– Joins between tables based on specific keys

– Group by based on specific keys

ALL

– Medium dimension tables

EVEN

– Tables with no joins or group by clauses

– Small dimension tables

– Cases where neither KEY nor ALL applies

Furthermore, within each partition, data can be sorted in three different ways to further improve query performance:

1. Single Column

2. Compound (many columns; the order of the columns affects the sort order)

3. Interleaved (many columns; the order of the columns does not affect the sort order, i.e. equal weight is given to each column)
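To see why column order matters for a compound sort key, here is a minimal sketch using plain Python tuples as the sort key. The `rows` data and column names are hypothetical, and this only illustrates ordering; it does not model how Redshift stores sorted blocks or how interleaved keys are built.

```python
# Hypothetical rows with two candidate sort columns.
rows = [
    {"region": "eu", "day": 2},
    {"region": "us", "day": 1},
    {"region": "eu", "day": 1},
    {"region": "us", "day": 2},
]

# Compound key (region, day): rows are grouped by region first, then by day.
by_region_then_day = sorted(rows, key=lambda r: (r["region"], r["day"]))

# Compound key (day, region): the same rows, but grouped by day first.
by_day_then_region = sorted(rows, key=lambda r: (r["day"], r["region"]))
```

A filter on `region` alone touches one contiguous run in the first ordering but is scattered across the second, which is the trade-off the choice of leading column controls.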

The AWS documentation covers the design of distribution keys in detail.

Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability.

As per the AWS documentation, tables, items, and attributes are the core components that you work with in DynamoDB. A table is a collection of items, and each item is a collection of attributes. DynamoDB uses primary keys to uniquely identify each item in a table and secondary indexes to provide more querying flexibility.

DynamoDB supports two different kinds of primary keys:

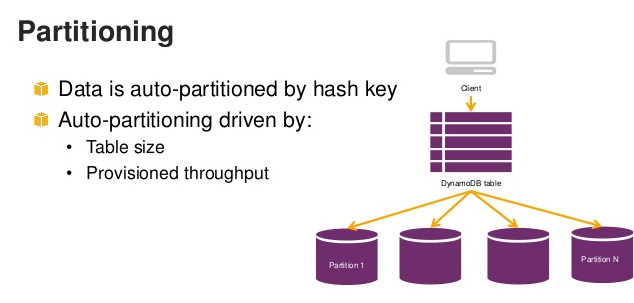

- Partition key – A simple primary key, composed of one attribute known as the partition key. DynamoDB uses the partition key's value as input to an internal hash function. The output from the hash function determines the partition (physical storage internal to DynamoDB) in which the item will be stored.

- Partition key and sort key – Referred to as a composite primary key, this type of key is composed of two attributes. The first attribute is the partition key, and the second attribute is the sort key. DynamoDB uses the partition key value as input to an internal hash function. The output from the hash function determines the partition (physical storage internal to DynamoDB) in which the item will be stored. All items with the same partition key are stored together, in sorted order by sort key value. In a table that has a partition key and a sort key, it is possible for two items to have the same partition key value; however, those two items must have different sort key values.
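The behaviour of a composite primary key described above can be sketched with a small in-memory model. Everything here is an assumption made for illustration: `ToyTable`, `partition_for`, `NUM_PARTITIONS` and the choice of MD5 are hypothetical, since DynamoDB's actual hash function and partition management are internal.

```python
import hashlib
from collections import defaultdict

NUM_PARTITIONS = 4  # hypothetical; DynamoDB manages the real number itself


def partition_for(partition_key):
    """Map a partition key to a partition id (MD5 is only a stand-in hash)."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % NUM_PARTITIONS


class ToyTable:
    def __init__(self):
        # partition id -> partition key -> {sort key: item}
        self.partitions = defaultdict(lambda: defaultdict(dict))

    def put(self, partition_key, sort_key, item):
        # The (partition key, sort key) pair is the primary key, so writing
        # the same pair again overwrites the previous item.
        self.partitions[partition_for(partition_key)][partition_key][sort_key] = item

    def query(self, partition_key):
        """Return (sort_key, item) pairs for one partition key, ordered by sort key."""
        items = self.partitions[partition_for(partition_key)].get(partition_key, {})
        return sorted(items.items(), key=lambda pair: pair[0])


table = ToyTable()
table.put("user#1", "2024-03", {"total": 30})
table.put("user#1", "2024-01", {"total": 10})
table.put("user#2", "2024-02", {"total": 20})

user1_orders = table.query("user#1")  # both items, ordered by sort key
```

Only the partition key decides where an item lives; the sort key only orders items that already share a partition key.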

Another important DynamoDB feature that also affects partitioning is provisioned read and write throughput:

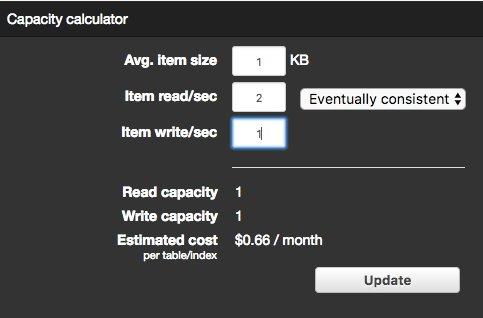

When you create a table, you specify how much provisioned throughput capacity you want to reserve for reads and writes.

DynamoDB will reserve the necessary resources to meet your throughput needs while ensuring consistent, low-latency performance.

In DynamoDB, you specify provisioned throughput requirements in terms of capacity units.

You can set up required capacity as part of the table set up or dynamically modify RCU/WCU once the table is up.

When defining a new table through the AWS Console, you can use the 'Capacity calculator' to estimate the required capacity:

In addition, DynamoDB has two types of indexes: Local Secondary Indexes and Global Secondary Indexes.

Creating a global secondary index is quite similar to creating a new table. The big advantage is that DynamoDB keeps the index updated for you.

Queries must specify the exact index against which they’ll run.

Local secondary indexes live within each partition key value, so they are useful only if you have a composite primary key.

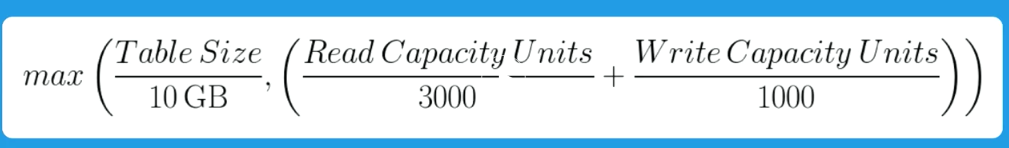

The result of the calculation is then rounded up.

For example, if a table is 25 GB in size, with 15,000 provisioned RCU and 12,000 provisioned WCU, the number of partitions will be: CEILING(MAX(25/10, 15000/3000 + 12000/1000)) = CEILING(MAX(2.5, 5 + 12)) = 17 partitions.
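The worked example above can be reproduced with a few lines of Python. The function name `estimated_partitions` is hypothetical, and the constants (10 GB, 3,000 RCU and 1,000 WCU per partition) are simply the ones used in the example, so treat this as a back-of-the-envelope estimate rather than DynamoDB's actual allocation logic.

```python
import math

# Per-partition limits taken from the example above (assumptions, not an API).
GB_PER_PARTITION = 10
RCU_PER_PARTITION = 3000
WCU_PER_PARTITION = 1000


def estimated_partitions(size_gb, rcu, wcu):
    """Estimate partitions as the larger of the size and throughput needs, rounded up."""
    by_size = size_gb / GB_PER_PARTITION
    by_throughput = rcu / RCU_PER_PARTITION + wcu / WCU_PER_PARTITION
    return math.ceil(max(by_size, by_throughput))


example = estimated_partitions(size_gb=25, rcu=15000, wcu=12000)  # 17
```

In this example throughput, not size, drives the partition count: the same 25 GB table with very low provisioned capacity would need only 3 partitions.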

Issues that we might face when designing provisioned capacity:

In summary, partitioning in DynamoDB is influenced by two key factors: primary keys (partition keys) and provisioned capacity. A good understanding of those factors is fundamental for getting a well-performing table.

Image source: https://www.slideshare.net/InfoQ/amazon-dynamodb-design-patterns-best-practices

In third place, in terms of the importance of partitioning, I'd put AWS Kinesis Streams. It is a fully managed service that enables building custom applications that process or analyze streaming data.

It can be used for website clickstreams, financial transactions, social media feeds, IT logs, location-tracking events and more.

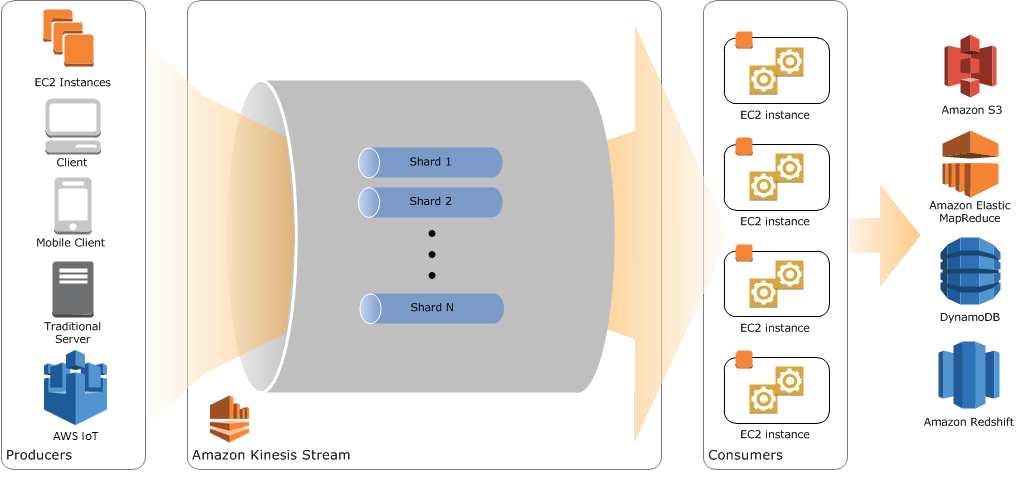

In the Kinesis Streams service, we can think of a stream as the equivalent of a “table”, and each stream is divided into “shards”.

So, a shard is the equivalent of a “table partition”, since records get distributed among the shards defined in each stream.

As with DynamoDB, the main reason for provisioning a small or large number of shards is the expected number of I/O operations per second, and not some business logic for distributing data across partitions.

But in contrast with DynamoDB, a producer application (i.e. an application that sends records to a Kinesis stream) can control, for each record it writes, which shard the record is routed to.
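As documented by AWS, each record carries a partition key, Kinesis maps that key to a 128-bit integer with MD5, and the record goes to the shard whose hash key range contains that integer; a producer can also pass an explicit hash key to override the computed value. The sketch below imitates that routing. The helper names `shard_ranges` and `shard_for` are hypothetical, and the assumption that shards split the hash space evenly only holds for a stream that has not been resharded unevenly.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 output interpreted as a 128-bit integer


def shard_ranges(num_shards):
    """Split the hash space into contiguous, evenly sized (start, end) ranges."""
    step = HASH_SPACE // num_shards
    ranges = []
    for i in range(num_shards):
        start = i * step
        # The last shard absorbs any remainder so the whole space is covered.
        end = HASH_SPACE - 1 if i == num_shards - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges


def shard_for(partition_key, ranges, explicit_hash_key=None):
    """Return the index of the shard that would receive the record."""
    if explicit_hash_key is not None:
        # Producer pins the record to a position in the hash space.
        value = explicit_hash_key
    else:
        digest = hashlib.md5(partition_key.encode("utf-8")).digest()
        value = int.from_bytes(digest, "big")
    for shard_id, (start, end) in enumerate(ranges):
        if start <= value <= end:
            return shard_id
    raise ValueError("hash value outside the 128-bit hash space")


ranges = shard_ranges(4)
same_shard = shard_for("device-42", ranges) == shard_for("device-42", ranges)
pinned_first = shard_for("ignored", ranges, explicit_hash_key=0)
pinned_last = shard_for("ignored", ranges, explicit_hash_key=HASH_SPACE - 1)
```

Records that share a partition key always land on the same shard, which is what preserves their relative order for a consumer.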

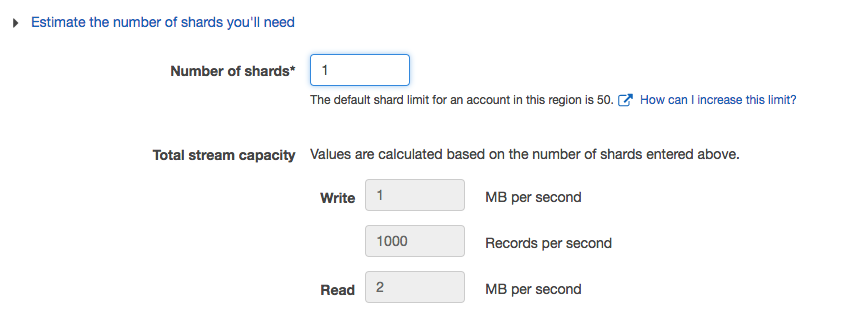

In the Kinesis Streams console, you can estimate the number of shards you'll need to provision for a specific stream (and thus estimate the number of partitions of the stream):

Similar to DynamoDB (where capacity is a function of provisioned RCU/WCU), a stream is composed of one or more shards, each of which provides a fixed unit of capacity.

Each shard can support up to 5 transactions per second for reads, up to a maximum total data read rate of 2 MB per second, and up to 1,000 records per second for writes, up to a maximum total data write rate of 1 MB per second (including partition keys). Thus, the data capacity of a stream is a function of the number of shards that you provision.
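Using the per-shard limits quoted above, a rough shard estimate can be sketched as follows. The function `required_shards` and its parameter names are hypothetical, and the console's own estimator may use a different formula; this version simply takes whichever limit is hit first.

```python
import math

# Per-shard limits quoted in the text above.
WRITE_MB_PER_SHARD = 1
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2


def required_shards(write_mb_per_s, write_records_per_s, read_mb_per_s):
    """Return the smallest shard count that satisfies all three limits."""
    return max(
        1,  # a stream always has at least one shard
        math.ceil(write_mb_per_s / WRITE_MB_PER_SHARD),
        math.ceil(write_records_per_s / WRITE_RECORDS_PER_SHARD),
        math.ceil(read_mb_per_s / READ_MB_PER_SHARD),
    )


# Hypothetical workload: 3.5 MB/s in, 2,500 records/s in, 10 MB/s out.
shards = required_shards(3.5, 2500, 10)  # 5, driven by the read rate
```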

Although consumer applications can theoretically read data from a specific shard (i.e. a partition), as far as I know this is not common. On the contrary, usually the whole stream is processed, and only third-party services might aggregate or filter data according to specific criteria. This way of working places the Kinesis Streams service close to EMR, in the sense that all the data in a stream is processed and the partitioning is meant to enhance parallel processing and support the expected I/O capacity.

Following this analogy, DynamoDB should be placed between Kinesis Streams and Redshift, since the partitioning of data is meant both for querying specific chunks of data (as with Redshift) and as a way of effectively provisioning read/write throughput.

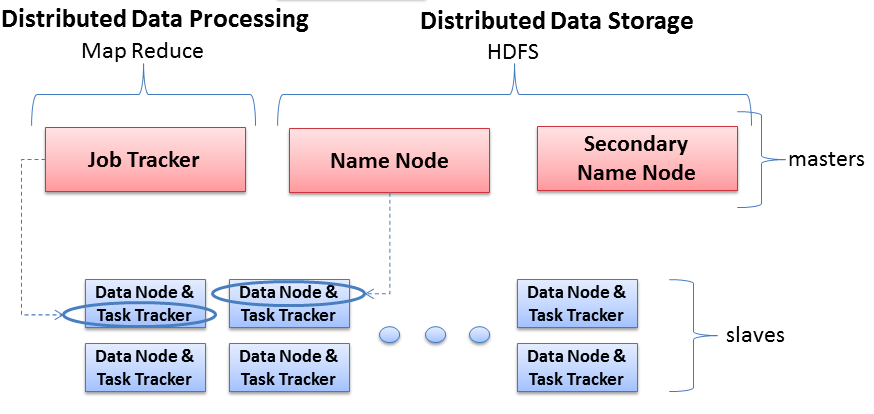

Finally, AWS EMR (Elastic MapReduce), a fully managed Hadoop cluster, is in the 'last place' regarding importance of partition keys.

EMR enables parallel analysis of large data sets for use cases such as log analysis, web indexing, financial analysis and more.

Usually, data hosted in a Hadoop cluster is used for a full analysis with methodologies such as MapReduce: a given job or query typically processes all the data stored in the cluster.

A well-known example of a MapReduce implementation is counting words in a huge corpus of data. The 'huge' file is split into chunks of 64 MB (or more) among several data nodes (and replicated three times by default, since the hardware is 'cheap' and prone to fail). Each chunk is then analyzed in parallel: the map function emits a key-value entry for each word it finds, where the key is the word and the value is 'one'. The final 'reduce' step gets the results from all the mappers and sums the values per word into one final key-value list.

As a result of this architecture, a 'partition key' has no meaning here, since data is split evenly across data nodes in chunks of 64 MB (or more).
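The word-count flow can be sketched in plain Python, with no Hadoop involved. The function names are hypothetical, chunks are cut by word count rather than by 64 MB blocks, and the "parallel" map step is run sequentially here to keep the sketch self-contained.

```python
from collections import defaultdict


def split_into_chunks(text, words_per_chunk):
    """Stand-in for HDFS blocks: cut the corpus into fixed-size word chunks."""
    words = text.split()
    return [words[i:i + words_per_chunk] for i in range(0, len(words), words_per_chunk)]


def map_chunk(chunk):
    """Map step: emit a (word, 1) pair for every word in one chunk."""
    return [(word.lower(), 1) for word in chunk]


def shuffle(mapped_chunks):
    """Group the emitted values by key so each word's ones end up together."""
    grouped = defaultdict(list)
    for pairs in mapped_chunks:
        for word, one in pairs:
            grouped[word].append(one)
    return grouped


def reduce_counts(grouped):
    """Reduce step: sum the ones for each word."""
    return {word: sum(ones) for word, ones in grouped.items()}


def word_count(text, words_per_chunk=2):
    chunks = split_into_chunks(text, words_per_chunk)
    mapped = [map_chunk(chunk) for chunk in chunks]  # would run in parallel on a cluster
    return reduce_counts(shuffle(mapped))


counts = word_count("the cat and the hat")
```

Note that the chunk boundaries never depend on the content of the data, which is why no partition key is involved.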

The image below describes, in a nutshell, the Hadoop architecture that is behind an EMR cluster:

According to the Hadoop model, all the data is split (and replicated by default 3 times) across the data nodes.

The master nodes oversee the two key functional pieces that make up Hadoop: storing lots of data (HDFS) and running parallel computations on all that data (MapReduce). The Name Node oversees and coordinates the data storage function (HDFS), while the Job Tracker oversees and coordinates the parallel processing of data using MapReduce.

The goal here is fast parallel processing of lots of data. (For a detailed explanation of the Hadoop architecture, please refer to the following post.)

Summary

We can place the four services in a 2x2 matrix summarizing the relation between distribution and partitioning:

As a 'good old' (well, not so old) relational database, Redshift is both a distributed and partitioned service. It even offers several partitioning approaches (a.k.a. distribution styles).

DynamoDB, a NoSQL database, is distributed and partitioned, but the partitioning is much more restricted, being tied to a specific attribute in each table.

Kinesis Streams is mainly a distributed service, offering partitions (as shards) mainly as a way of managing the stream of incoming data.

Finally, EMR is a distributed service, with no 'key' partitioning mechanisms.

The material for this post is based on my knowledge and experience and also based on several sources, mainly:

- AWS documentation

- acloud.guru big data specialization

- Several other sources, cited across the post.