Classification of Yelp Users' Reviews

Introduction

The purpose of this project is to compare a 'basic' sentiment analysis algorithm with a more elaborate one.

The algorithms were tested on the reviews written by users (mainly for restaurants), as supplied by Yelp for its 2016 challenge ('Round 8') in the 'business' JSON dataset.

This dataset has two main fields of interest for this project: 'text', which contains a (qualitative) review by a user for a specific business, and 'stars', a numeric rating (ranging from 1 to 5) that serves as quantitative feedback.
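As a rough illustration of how the two fields could be pulled out, here is a minimal Python sketch. It assumes the dataset stores one JSON object per line; the function name `iter_reviews` and the two sample records are made up for the example, and the project's own code (in the repository linked at the end) is not reproduced here.

```python
import io
import json


def iter_reviews(lines):
    """Yield (text, stars) pairs from an iterable of JSON-encoded lines.

    Assumption: each non-empty line is one JSON object with 'text' and 'stars'.
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        yield record["text"], int(record["stars"])


# Two made-up records standing in for the real file.
sample = io.StringIO(
    '{"text": "Great food and friendly staff", "stars": 5}\n'
    '{"text": "Cold soup, rude waiter", "stars": 1}\n'
)
reviews = list(iter_reviews(sample))
```

With a real file, the `io.StringIO` object would be replaced by an open file handle, so the reviews are streamed one at a time instead of being loaded all at once.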

The output of both algorithms (the benchmark and the advanced one) was a classification of each review by one of three possible sentiments: 'Positive', 'Neutral' or 'Negative'.

Validating the results: Each classification was validated against the 'stars' that the user gave the business along with the review.

The underlying interpretation of the connection between qualitative and quantitative feedback is that a negative review equals one or two stars, a neutral one equals three stars, and a positive review equals four or five stars. Of course, this interpretation is subjective and may differ from user to user, thus creating some discrepancies in the validation of the results.
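The mapping described above is simple enough to write down directly. The following Python sketch is only an illustration; the function name `stars_to_sentiment` is hypothetical.

```python
def stars_to_sentiment(stars):
    """Map a 1-5 star rating to the sentiment label used for validation."""
    if stars not in (1, 2, 3, 4, 5):
        raise ValueError("stars must be an integer from 1 to 5")
    if stars <= 2:
        return "Negative"
    if stars == 3:
        return "Neutral"
    return "Positive"


labels = [stars_to_sentiment(s) for s in (1, 2, 3, 4, 5)]
```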

The Analysis

Part 1 – The 'basic' algorithm.

The basic and relatively fast algorithm that I implemented as the benchmark works as follows:

- Read a lexicon containing positive and negative words – the list of words is based on Hu and Liu's lexicon

- For each review, create a corpus using the following transformations:

  - convert all words to lowercase

  - remove punctuation

  - remove 'english' stop words

- Count the negative and positive words in each corpus.

- Subtract the negative count from the positive count: if the result is < 0, the review is negative; if it is > 0, the review is positive; otherwise, it is classified as neutral.
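The steps above can be sketched in a few lines of Python. This is an illustrative sketch, not the project's code: the word sets below are tiny made-up stand-ins for Hu and Liu's lexicon and for a full English stop-word list, and the names `build_corpus` and `classify_basic` are hypothetical.

```python
import string

# Tiny stand-ins (assumptions) for the real lexicon and stop-word list.
POSITIVE = {"good", "great", "friendly", "delicious"}
NEGATIVE = {"bad", "rude", "cold", "awful"}
STOP_WORDS = {"the", "a", "an", "and", "was", "is", "but", "were"}


def build_corpus(review):
    """Lowercase, strip punctuation, and drop stop words."""
    lowered = review.lower()
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    return [word for word in no_punct.split() if word not in STOP_WORDS]


def classify_basic(review):
    """Classify by the sign of (positive count - negative count)."""
    words = build_corpus(review)
    positives = sum(word in POSITIVE for word in words)
    negatives = sum(word in NEGATIVE for word in words)
    score = positives - negatives
    if score > 0:
        return "Positive"
    if score < 0:
        return "Negative"
    return "Neutral"
```

For example, `classify_basic("Good pizza but bad service")` finds one positive and one negative word, so the difference is zero and the review is labeled neutral.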

The classification done by the algorithm is compared against the ranking that each user gave, together with the review.

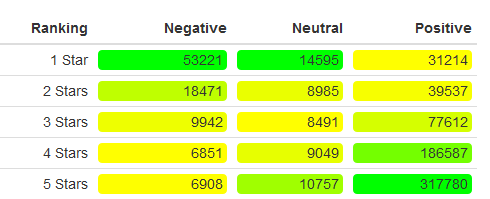

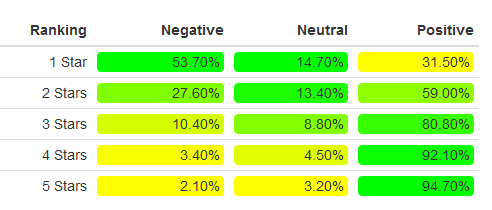

After running a sample of 800,000 reviews, the basic algorithm's results are as follows (the colored heatmap should be read column-wise: the green thru yellow coloring is per column, going from the highest to the lowest values):

According to the above tables, around 40.6% of Negative reviews (1 and 2 stars) are recognized correctly, and 93.4% of Positive reviews (4 and 5 stars) are also recognized correctly.
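Recognition rates of this kind are the share of reviews in each star-derived class whose predicted label matches. A minimal Python sketch of that calculation follows; the function name `recognition_rates` is hypothetical and the `toy` pairs are made up purely to exercise the code, they are not project results.

```python
from collections import Counter


def recognition_rates(pairs):
    """Return {expected_label: fraction predicted correctly}.

    pairs: iterable of (expected, predicted) sentiment labels.
    """
    totals = Counter()
    hits = Counter()
    for expected, predicted in pairs:
        totals[expected] += 1
        if expected == predicted:
            hits[expected] += 1
    return {label: hits[label] / totals[label] for label in totals}


# Made-up (expected, predicted) pairs, for illustration only.
toy = [
    ("Negative", "Negative"),
    ("Negative", "Positive"),
    ("Negative", "Neutral"),
    ("Negative", "Negative"),
    ("Positive", "Positive"),
    ("Positive", "Positive"),
    ("Neutral", "Positive"),
]
rates = recognition_rates(toy)
```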

The execution of the analysis was performed on an m4.4xlarge EC2 instance, and took about one hour and 40 minutes.

Part 2 – The 'advanced' algorithm.

Several aspects of natural language analysis are handled by this algorithm, including expressions, negation words, words written in uppercase, ambiguous words, etc.

In addition, the algorithm can be tuned by assigning different 'weights' to different parts of the analysis. For example, if someone writes that a place was AWFUL, the 'calculated' meaning can be 'negative word' * 2 (i.e. twice as negative as just 'awful').

The following weights were set for the current analysis:

- 'negations weight' = 1

- 'uppercase weight' = 5

- 'confirmation weight' = 3

Without going into too much detail, the advanced algorithm implements the following flow:

- Creating a corpus for each review.

- Since some stop words change the meaning of the review, prior to their removal, some expressions are checked and replaced by their meaning (e.g. 'good' or 'bad').

- Handling ambiguous words, such as 'like', which can be part of the subject and/or predicate of a sentence.

- Handling expressions and replacing them with the words 'good' or 'bad', to simplify the upcoming analysis.

- Counting positive and negative words and calculating the current sentiment

- 'Negation check': For each positive or negative word found, a negation word (such as 'no', 'not'), placed one or two positions prior to the positive/negative word itself, is looked up. If such a word is found, a recalculation of sentiment is performed, changing the value from positive to negative and vice versa.

  A common practice in natural language analysis is the creation of n-grams: 2-grams being all sequences of two consecutive words, 3-grams all sequences of three consecutive words, etc. I preferred the current method (searching by index) for performance reasons.

- 'Confirmation check': Words such as 'so', 'too', etc., prior to a positive or negative word, tend to express a stronger feeling, so if such words are found (and according to the pre-set weight), a new calculation of the sentiment is performed.

- 'Uppercase check': Similar to the 'confirmation' logic, if a word is written in uppercase, its meaning is emphasized, so according to the pre-set weight, another re-calculation is done in such cases.
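The three checks above can be sketched in Python as follows. This is a sketch under stated assumptions, not the project's implementation: the exact recalculation rules are not given here, so the sketch assumes each sentiment word starts at +1 or -1, an uppercase word is multiplied by the uppercase weight, a confirmation word immediately before it multiplies by the confirmation weight, and a negation word one or two positions before it flips the sign (scaled by the negation weight). The word sets are tiny made-up stand-ins, and `score_review` and `classify_advanced` are hypothetical names.

```python
import string

# Tiny stand-in word lists (assumptions, not the project's lexicon).
POSITIVE = {"good", "great", "tasty"}
NEGATIVE = {"bad", "awful", "rude"}
NEGATIONS = {"no", "not", "never"}
CONFIRMATIONS = {"so", "too", "very"}

# Weights as listed for the current analysis.
WEIGHTS = {"negation": 1, "uppercase": 5, "confirmation": 3}


def score_review(review, weights=WEIGHTS):
    """Sum weighted word scores, looking up neighbours by index (no n-grams)."""
    stripped = review.translate(str.maketrans("", "", string.punctuation))
    tokens = stripped.split()              # original case kept for the uppercase check
    lowered = [token.lower() for token in tokens]
    total = 0
    for i, word in enumerate(lowered):
        if word in POSITIVE:
            value = 1
        elif word in NEGATIVE:
            value = -1
        else:
            continue
        # Uppercase check: a fully uppercase word is emphasized.
        if tokens[i].isupper():
            value *= weights["uppercase"]
        # Confirmation check (assumed: the word immediately before).
        if i >= 1 and lowered[i - 1] in CONFIRMATIONS:
            value *= weights["confirmation"]
        # Negation check: one or two positions before flips the sign.
        if any(w in NEGATIONS for w in lowered[max(0, i - 2):i]):
            value = -value * weights["negation"]
        total += value
    return total


def classify_advanced(review):
    score = score_review(review)
    if score > 0:
        return "Positive"
    if score < 0:
        return "Negative"
    return "Neutral"
```

Looking back one or two positions by index avoids materializing every 2-gram and 3-gram of the review, which is the performance trade-off mentioned in the negation step.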

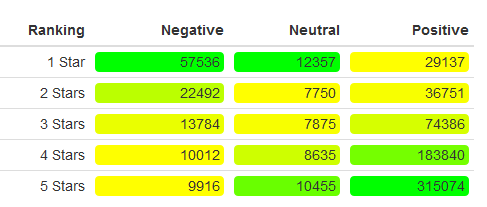

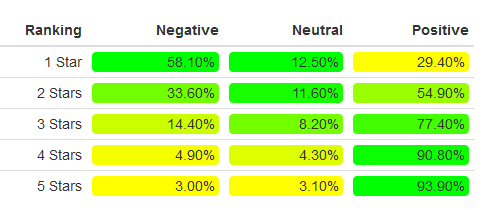

After running a similar sample of 800000 reviews, the advanced algorithm's results are as follows:

According to the above tables, around 46% of Negative reviews are recognized correctly (1 and 2 stars), and 92.3% of Positive reviews are also recognized properly (4 and 5 stars).

The execution of the analysis was performed on an m4.4xlarge EC2 instance, and it took about three hours and 50 minutes.

Conclusion

The 'basic' model, as sometimes happens with 'naïve' models, performs relatively well when applied to Yelp's corpus of reviews, at least when classifying Positive and Neutral ones.

The 'advanced' model offers certain improvements, mainly in classifying negative reviews, but generally it appears that upset or disappointed customers tend to be more 'poetic' (i.e. they tend to use more irony, sarcasm and cynicism) when writing a negative review, which makes classification more complicated.

Further analysis, mainly of negative reviews, is required in order to improve the recognition rates. Also, some performance tuning should be done to reduce the advanced algorithm's execution time.

Final Note

A common methodology in text analysis involves creating a document-term matrix, with each document as a row and each term found in the documents as a column (so each cell holds the frequency of that term in that document).

Based on such matrices, different machine learning models are implemented, which try to learn from the whole set of documents and predict the outcome (in our case, classifying each review as positive, neutral or negative).

This methodology is sometimes more time- and memory-consuming.
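To make the matrix concrete, here is a minimal pure-Python sketch; the function name `document_term_matrix` and the two sample documents are made up for illustration, and real projects would typically rely on a text-mining library instead.

```python
from collections import Counter


def document_term_matrix(documents):
    """Return (vocabulary, matrix): one row per document, one column per term."""
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # A Counter returns 0 for terms that do not occur in this document.
        matrix.append([counts[word] for word in vocabulary])
    return vocabulary, matrix


docs = ["good food good service", "bad food"]
vocab, dtm = document_term_matrix(docs)
```

The matrix has one column for every distinct term across all documents, so its width grows with the vocabulary of the whole set, which is where the extra memory goes.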

The methodology implemented in this analysis handles each document as an isolated case, which is more efficient in time and processing, but misses possible insights from analyzing a huge set of documents as a whole.

Each methodology has pros and cons, and should be implemented according to the requirements and limitations of the project.

Technical Information

- The whole project can be fully reproduced. The source code can be found under https://github.com/AviBlinder/SentimentAnalysis

- Some rounds of the project were performed on AWS instances (M4 family) and based on a private RStudio AMI, equipped with 'git' integration, thus allowing an ongoing development and testing process.

Running on an m4.4xlarge EC2 instance, the analysis of 800,000 reviews took around 3 hours and 50 minutes. Since the execution used 'spot instances' and the cost per hour was less than 20 cents, the total cost was less than 80 cents.